May the Best (Operating) Models Win

Introducing the Innowaring Model — A Framework for Accelerating AI-Native Products and Systems Innovation

The Gen-AI revolution is running into an innovation deficit.

According to Gartner, 30% of enterprise Gen-AI projects will be abandoned by the end of 2025—stuck in the PoC phase, never making it to production. Meanwhile, Gen-AI startups are struggling to differentiate. Their products face commoditization risk from tech incumbents, and their innovations aren’t translating into real-world usage.

Most analyses point to the usual “readiness” or “maturity” challenges common to every new tech cycle: technical limitations, messy data, inefficient processes, unclear ROI, slow-moving orgs. But these analyses miss the mark.

At a deeper level, they make a categorical error.

Gen-AI isn’t just the next wave like Cloud or Mobile. It is fundamentally different from the waves that came before. And it is tearing up the playbooks of technology innovation in ways we’re only beginning to understand.

From Tools to Systems: What Makes Gen-AI Different

Gen-AI isn’t a technological invention like Cloud or Mobile. As Jeff Bezos puts it, it's a discovery that scaling up AI models makes them surprisingly powerful general-purpose completion machines.

This discovery has unleashed a wave of foundational models that can handle a wide range of tasks. But there’s a catch: those tasks must first be converted into completion problems. That conversion step is the most exciting and frustrating aspect of working with Gen-AI.

It’s exciting because so much of knowledge work can be reframed this way. Writing emails or code, summarizing documents, answering questions, and even navigating enterprise knowledge—many of these tasks translate well into completion tasks.

And unlike traditional machine learning, foundational AI models dramatically reduce the burden of task-specific training. You no longer need thousands of labeled examples. Often, 15–20 well-chosen examples are enough to get you 80% of the way.
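To make the conversion step concrete, here is a minimal sketch of how a task such as support-ticket triage might be reframed as a completion problem with a handful of examples. The ticket texts, category names, and the `build_few_shot_prompt` helper are all hypothetical and exist only for illustration; a real system would send the resulting prompt to whatever model API the team uses.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked example: an input and the completion we want for it."""
    text: str
    label: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Frame a classification task as a completion problem.

    The prompt ends right where the answer should begin, so the most
    natural continuation for the model is the label for `query`.
    """
    lines = [instruction.strip(), ""]
    for ex in examples:
        lines.append(f"Ticket: {ex.text}")
        lines.append(f"Category: {ex.label}")
        lines.append("")
    lines.append(f"Ticket: {query}")
    lines.append("Category:")
    return "\n".join(lines)


# Hypothetical examples; in practice these would be curated by domain experts.
examples = [
    Example("I was charged twice this month.", "billing"),
    Example("The app crashes when I upload a file.", "bug"),
    Example("How do I add a teammate to my workspace?", "how-to"),
]

prompt = build_few_shot_prompt(
    "Classify each support ticket into one category.",
    examples,
    "My invoice shows the wrong company name.",
)
print(prompt)
```

Notice that nothing here is trained. The examples live in the prompt, which is why a business user or support agent can change system behavior simply by editing them.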

Once these models became available via API, spinning up PoCs became trivial and accessible to teams and organizations of any size.

But turning those PoCs into high-impact systems is another story altogether. Real-world workflows span multiple tasks, roles, and decision points in a nonlinear way. They depend on nuance, domain context, and reliability. And here, achieving the last 20% of performance can take 80% of the effort.

That is the frustrating part because model and agent outputs are probabilistic, not deterministic. We’re no longer writing fixed logic. We are shaping system behavior through prompts, examples, external knowledge, reasoning scaffolds, and finely tuned input/output constraints in the UI. Achieving the correct behavior requires a lot of trial and error.
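One common way teams cope with probabilistic outputs is to wrap the model call in explicit output constraints and retry when a response violates them. The sketch below is one possible shape for that pattern, not a prescribed implementation. The `constrained_call` function, the `ALLOWED` categories, and the flaky stand-in model are assumptions made up for this example; the stand-in replaces a real API call so the code runs on its own.

```python
import json
from typing import Callable

# Hypothetical output constraint: the only categories the system may emit.
ALLOWED = {"billing", "bug", "how-to"}


def constrained_call(model: Callable[[str], str], prompt: str, max_attempts: int = 3) -> dict:
    """Call `model` until its output satisfies our output constraints.

    `model` is any function from prompt text to completion text; in a real
    system it would wrap an API call. Raises ValueError if no attempt passes.
    """
    last_error = "no attempts made"
    for attempt in range(1, max_attempts + 1):
        raw = model(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"attempt {attempt}: not valid JSON ({exc.msg})"
            continue
        if not isinstance(data, dict) or data.get("category") not in ALLOWED:
            last_error = f"attempt {attempt}: category missing or not allowed"
            continue
        return {"category": data["category"], "attempts": attempt}
    raise ValueError(last_error)


def make_flaky_model() -> Callable[[str], str]:
    """Stand-in for a real model: fails twice, then answers correctly."""
    replies = iter([
        "Sure! The category is billing.",  # chatty prose, not JSON
        '{"category": "refund"}',          # valid JSON, disallowed value
        '{"category": "billing"}',         # satisfies both constraints
    ])
    return lambda prompt: next(replies)


result = constrained_call(make_flaky_model(), "Classify: I was charged twice.")
print(result)  # {'category': 'billing', 'attempts': 3}
```

The same input produced three different outputs here, and only the wrapper made the system's behavior dependable. Much of the trial and error goes into tuning exactly these kinds of constraints.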

The more time I spend helping teams productionize AI models and agents for enterprise use cases, the clearer it becomes:

We’re not just adopting a new technology. We’re learning entirely new design patterns for building software systems—AI-native systems.

From PoCs to Business Impact: Why Operating Norms Matter More Than You Think

Gen-AI’s value can’t be unlocked by simply dropping a model or agent into a workflow or running a string of incremental PoCs. It requires clear strategic intent: a deliberate effort to define and imagine the kinds of problems that have now become solvable with general-purpose models.

Without that, it’s far too easy to end up with 100 disconnected PoCs and nothing to show for them.

The so-called “lack of real Gen-AI use cases” isn’t a flaw in the technology. It reflects a deeper challenge: Our operating norms for product and systems innovation must catch up quickly. And more than the Cloud or Mobile waves before it, Gen-AI demands a fundamental shift in how we think, build, and collaborate.

Gen-AI demands cross-functional collaboration at a depth we haven’t seen before. A business user can shape system behavior with a prompt. A support agent can spot what needs fine-tuning. Product managers can code. Engineers can rethink entire workflows. The ideas can come from anywhere.

This democratization of capability blurs traditional roles. That’s powerful—but only if teams are clear about ownership and collaboration. The best AI use cases won’t be defined in silos. They will be co-created by product, engineering, compliance, legal, and domain experts.

Once a use case is in motion, the teams that win will be those operating in tight, iterative feedback loops with real users, not those following rigid delivery plans.

Julie Zhuo, former VP of Design at Facebook, advocates a “prototype and prune” approach: Start with “code-first prototypes” and spend most of your time testing “whether an idea has legs.”

Despite all the time and energy spent on Agile transformations, Julie's approach is still foreign to most enterprise environments, where product and system development tends to be linear and driven by documentation, planning, and gated approvals.

But in the world of Gen-AI, discovering and scaling high-value use cases is less about technology and more about whether an organization can foster a trust-based culture that allows people to explore, adapt, and learn in real time.

Introducing the Innowaring Model: A Principles-First Approach for AI-Native Product and Systems Innovation

The Gen-AI innovation deficit exists because most teams are approaching foundational models and AI agents the wrong way. They treat them as an add-on to existing systems and ways of working. But making the most of the Gen-AI shift requires something different: a challenge to the operating norms that shape how organizations build, ship, and scale technology.

That’s where the Innowaring Model comes in.

It’s a principles-first framework for scaling AI-native products and systems innovation, not by layering AI onto legacy structures, but by rethinking how organizations operate with AI at their core.

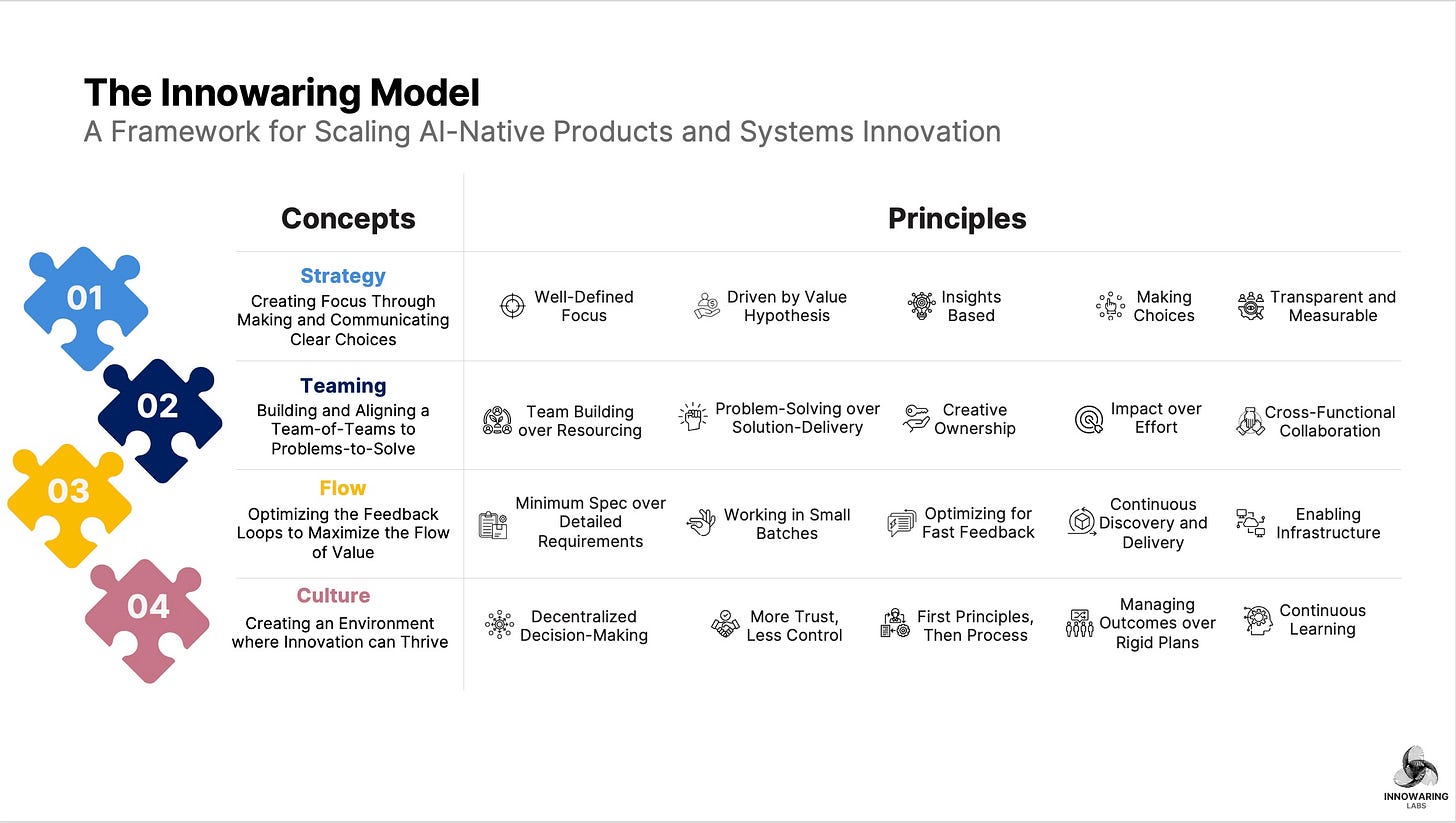

The model builds on the foundational principles I introduced in my 2023 book, Becoming a Software Company, and integrates emerging thinking around Gen-AI innovation. It’s organized around four core concepts: Strategy, Teaming, Flow, and Culture—each supported by principles designed to help organizations thrive in the Gen-AI era.

Why am I placing a special emphasis on principles? They provide stable ground in a fast-moving landscape. The fundamentals of building innovative software systems haven’t changed, but AI raises the stakes. It creates sharper and more urgent imperatives for how we define strategy, organize teams, deliver value, and evolve culture.

I won’t restate every concept and principle (I have done it here: Link 1, Link 2, Link 3, Link 4, Link 5). Instead, I will review the Gen-AI impact through various Innowaring concepts and principles, and outline imperatives for transforming the operating model for AI-Native products and systems innovation.

The Innowaring Imperatives

Focus on the Right Problems

Foundational AI models have massively expanded the solution space for the enterprise, making it easier to build but harder to focus. That makes defining the right problems an existential priority. Strategic focus isn’t optional; it’s the only way to avoid a graveyard of impressive but irrelevant AI pilots.

Achieve Differentiation Through Workflow Innovation and Solving User Problems

The edge isn’t in the model or agents—it’s in reimagining workflows and solving problems for user networks. That kind of innovation doesn’t come from roadmaps—it comes from live experimentation. Teams need the space and context to discover unique, high-value opportunities at the edge of real work.

Avoid the Solutionist Trap

AI tends to amplify the solution bias. Teams chase what’s familiar, wrapping models around problems others are solving, or that are easy to solve, instead of exploring what’s uniquely valuable to their target users. To counter this, strategic intent must be transparent, so teams can align around what truly matters.

Build Smaller, Smarter Teams

Gen-AI compresses timelines and blurs traditional role boundaries. Product, design, and engineering are converging. The most effective teams are smaller, more autonomous, and operate as cross-functional units with fewer handoffs and more context.

Continuously Improve at Working in Small Batches

Cloud and Mobile taught us to work in small batches. AI demands tight feedback loops with users. Shaping behavior with foundational models is inherently nonlinear. The smaller your batch, the faster you learn what works and what doesn’t.

Invest in the AIOps Infrastructure

The AI-native stack goes beyond code and data. It now includes prompts, retrieval pipelines, user simulations, real-time feedback capture, and evaluation frameworks. AIOps isn’t optional; it is the infrastructure layer that enables fast iteration at scale.
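To give a feel for what an evaluation framework looks like at its smallest, here is a rough sketch of a harness that runs a fixed set of cases through a system and reports the pass rate. Everything in it is an illustrative assumption: `EvalCase`, `run_eval`, and the `keyword_baseline` function, which stands in for a real prompt-plus-model pipeline so the example runs without any external service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """A single evaluation case: an input and the output we expect."""
    prompt: str
    expected: str


def run_eval(system: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through `system` and report pass rate and failures."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    failures = []
    for case in cases:
        actual = system(case.prompt).strip().lower()
        if actual != case.expected:
            failures.append((case.prompt, case.expected, actual))
    passed = len(cases) - len(failures)
    return {"pass_rate": passed / len(cases), "failures": failures}


def keyword_baseline(prompt: str) -> str:
    """Stand-in for the system under test (a real one would call a model)."""
    text = prompt.lower()
    if "charged" in text or "invoice" in text:
        return "billing"
    if "crash" in text:
        return "bug"
    return "how-to"


# Hypothetical cases; real ones would come from captured user feedback.
cases = [
    EvalCase("I was charged twice this month.", "billing"),
    EvalCase("The app crashes on upload.", "bug"),
    EvalCase("Refund my last payment, please.", "billing"),
    EvalCase("How do I invite a teammate?", "how-to"),
]

report = run_eval(keyword_baseline, cases)
print(f"pass rate: {report['pass_rate']:.0%}")
for prompt, expected, actual in report["failures"]:
    print(f"FAIL {prompt!r}: expected {expected}, got {actual}")
```

Rerunning a harness like this after every prompt or retrieval change is what turns trial and error into a feedback loop: the failure list tells the team where to look next, and the pass rate tells them whether the last change helped.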

Optimize the Information Flow for Value Flow

You don’t define perfect requirements up front for AI-Native development. You explore messy ideas and converge through learning. That requires short development cycles and short feedback loops with users. The faster you can test and discard ideas, the quicker you can build real value.

Cultivate Trust-Based Autonomy

Achieving high velocity in AI-Native development demands trust. The people closest to the work must be empowered to make decisions. Command-and-control slows everything down. Managing through trust isn’t just a soft skill—it’s a hard requirement with AI.

Right-Size Processes

Legacy processes often get lifted and shifted into new environments. That was flawed in the cloud era—and it’s fatal in the AI era. Your process must exist only to improve the cycle time for feedback, not as a control artifact. Here, culture, what it allows and doesn’t allow, is your differentiator.

Embrace Experimentation and Adaptation

Software innovation has moved steadily up the stack—from infrastructure to apps. AI is pushing it further into the heart of business workflows. Success depends not on mastering the models or agents, but on creating an organizational culture and systems that support workflow innovation at the edge of work.

From Innovation Deficit to Innovation Discipline

The Gen-AI innovation deficit isn’t a failure of technology. It’s a failure of imagination, execution, and adaptation.

Foundational AI models have opened up extraordinary new possibilities, but realizing their value depends entirely on how we build with them. It requires a new kind of AI-native system thinking: reshaping teams, rethinking strategy, and evolving culture and process to unlock the next wave of software innovation.

That’s where the Innowaring Model comes in—a thinking tool to help organizations move beyond admiration and into action. Grounded in principles, it offers a path out of PoC purgatory and toward building real systems that create lasting business value.

The next era of enterprise innovation won’t be led by those who simply use AI.

It will be led by those who know how to build with it, at scale, with purpose, and on principle.